Machine Learning: Lab 10 - Support Vector Machines

Lab 10: Support Vector Machines

Task 1: SVC in sklearn and the penalty coefficient C

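- A diabetes dataset, diabetes.csv, is provided. It has 768 samples and 9 columns (8 input features plus the target in the last column) and is already stored in the variable data. The columns are described below: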

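| Feature name | Meaning | Example value |
|---|---|---|
| feature1 | Number of pregnancies | 6 |
| feature2 | Plasma glucose concentration at 2 hours in an oral glucose tolerance test | 148 |
| feature3 | Diastolic blood pressure (mm Hg) | 72 |
| feature4 | Triceps skinfold thickness (mm) | 35 |
| feature5 | 2-hour serum insulin concentration (mu U/ml) | 0 |
| feature6 | Body mass index (weight in kg/(height in m)^2) | 33.6 |
| feature7 | Diabetes pedigree function | 0.627 |
| feature8 | Age | 50 |
| class | Whether the patient has diabetes | 1: positive; 0: negative |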

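The main tasks are:

- First, standardize the data with sklearn's StandardScaler.
- Then build a support vector machine with sklearn's svm.SVC classifier (all parameters left at their defaults), predict on the test set, store the predictions as pred_y, and evaluate the model.
- Finally, create a new svm.SVC instance clf_new with the penalty coefficient set to C=0.3, use it to predict on the test set, store the predictions as pred_y_new, and compare the predictive performance of the two models.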
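The three steps above can be sketched as follows. This is only one possible reading of the task, not the lab's reference solution: the helper name `fit_and_compare`, the 80/20 stratified split, and `random_state=0` are assumptions, and a synthetic dataset from `make_classification` stands in for diabetes.csv so that the block runs on its own.

```python
from sklearn.datasets import make_classification
from sklearn.metrics import classification_report
from sklearn.model_selection import train_test_split
from sklearn.preprocessing import StandardScaler
from sklearn.svm import SVC


def fit_and_compare(X, y, C_new=0.3, test_size=0.2, random_state=0):
    """Hypothetical helper: default SVC vs. SVC(C=C_new) on standardized data."""
    train_X, test_X, train_y, test_y = train_test_split(
        X, y, test_size=test_size, random_state=random_state, stratify=y
    )
    # Fit the scaler on the training split only, then apply it to both splits.
    scaler = StandardScaler().fit(train_X)
    scaled_train_X = scaler.transform(train_X)
    scaled_test_X = scaler.transform(test_X)

    # Model 1: all parameters at their defaults (C=1.0, RBF kernel).
    clf = SVC().fit(scaled_train_X, train_y)
    pred_y = clf.predict(scaled_test_X)

    # Model 2: smaller penalty coefficient, i.e. a softer margin.
    clf_new = SVC(C=C_new).fit(scaled_train_X, train_y)
    pred_y_new = clf_new.predict(scaled_test_X)
    return test_y, pred_y, pred_y_new, clf, clf_new


# Synthetic stand-in with the same shape as the lab data (768 rows, 8 features).
# With the real file one might instead use (assumed layout, target in last column):
#   data = pd.read_csv("diabetes.csv")
#   X, y = data.iloc[:, :-1].values, data.iloc[:, -1].values
X, y = make_classification(n_samples=768, n_features=8, n_informative=5, random_state=0)
test_y, pred_y, pred_y_new, clf, clf_new = fit_and_compare(X, y)

print(classification_report(test_y, pred_y))
print(classification_report(test_y, pred_y_new))
```

A smaller C penalizes margin violations less, so the second model usually keeps more support vectors; `clf.n_support_` and `clf_new.n_support_` report the per-class counts if you want to check this.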

Code to complete

`import pandas as pd`

[187 180]

| | precision | recall | f1-score | support |
|---|---|---|---|---|
| 0 | 0.82 | 0.90 | 0.86 | 107 |
| 1 | 0.70 | 0.55 | 0.62 | 47 |
| accuracy | | | 0.79 | 154 |
| macro avg | 0.76 | 0.73 | 0.74 | 154 |
| weighted avg | 0.78 | 0.79 | 0.78 | 154 |

[197 196]

| | precision | recall | f1-score | support |
|---|---|---|---|---|
| 0 | 0.83 | 0.92 | 0.87 | 107 |
| 1 | 0.75 | 0.57 | 0.65 | 47 |
| accuracy | | | 0.81 | 154 |
| macro avg | 0.79 | 0.75 | 0.76 | 154 |
| weighted avg | 0.81 | 0.81 | 0.80 | 154 |

Expected results

Task 2: SVC with the RBF kernel, and tuning the kernel bandwidth parameter gamma

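In a support vector classifier, the kernel function directly affects performance. The radial basis function (RBF) kernel is $K(x_i, x_j) = \exp\left(-\gamma \lVert x_i - x_j \rVert^2\right)$, and the entries of the kernel matrix $K$ are $K_{ij} = K(x_i, x_j)$.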
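To evaluate this kernel for all pairs at once, it can help to expand the squared distance into inner products. The expansion below is a standard identity; the matrix symbols $X$, $Y$, and $D$ are introduced here for illustration, with the rows of $X$ and $Y$ holding the samples $x_i$ and $y_j$:

$$
\lVert x_i - y_j \rVert^2 = x_i^\top x_i - 2\, x_i^\top y_j + y_j^\top y_j
$$

$$
D_{ij} = (X X^\top)_{ii} - 2\,(X Y^\top)_{ij} + (Y Y^\top)_{jj}, \qquad K_{ij} = \exp(-\gamma D_{ij})
$$

So the whole kernel matrix needs only the cross Gram matrix $X Y^\top$ and the diagonals of the two Gram matrices $X X^\top$ and $Y Y^\top$.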

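The main tasks are:

- Write a custom function rbf_kernel that implements the radial basis function; its inputs are two matrices X and Y, plus gamma.
- Use rbf_kernel to compute the kernel matrix of the standardized training set scaled_train_X, and store it as rbf_matrix.
- Use rbf_kernel as the kernel to train a support vector classifier clf, predict the labels of the standardized test data scaled_test_X, and evaluate the model.

> Hint: first compute the respective Gram matrices, then use np.diag to extract the diagonal elements and np.tile to expand the resulting vector into a matrix.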
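One way to follow the hint is sketched below. It is not the lab's reference solution: `gamma=0.1`, the random placeholder arrays standing in for scaled_train_X and scaled_test_X, and the placeholder labels are all assumptions made so the block runs on its own. Passing a callable as `kernel` to `SVC` is supported by sklearn; the callable receives two sample matrices and must return the kernel matrix between them.

```python
from functools import partial

import numpy as np
from sklearn.svm import SVC


def rbf_kernel(X, Y, gamma=1.0):
    """RBF kernel matrix between the rows of X (n, d) and the rows of Y (m, d)."""
    X = np.asarray(X, dtype=float)
    Y = np.asarray(Y, dtype=float)
    gram_xy = X @ Y.T                      # cross Gram matrix, shape (n, m)
    sq_x = np.diag(X @ X.T)                # squared norms of the rows of X, shape (n,)
    sq_y = np.diag(Y @ Y.T)                # squared norms of the rows of Y, shape (m,)
    # Expand both norm vectors to (n, m) so they line up with gram_xy.
    sq_x_mat = np.tile(sq_x.reshape(-1, 1), (1, Y.shape[0]))
    sq_y_mat = np.tile(sq_y, (X.shape[0], 1))
    sq_dist = sq_x_mat - 2.0 * gram_xy + sq_y_mat
    sq_dist = np.maximum(sq_dist, 0.0)     # guard against tiny negative round-off
    return np.exp(-gamma * sq_dist)


# Placeholder data (assumption): random arrays in place of the standardized splits.
rng = np.random.default_rng(0)
scaled_train_X = rng.normal(size=(40, 8))
scaled_test_X = rng.normal(size=(5, 8))
train_y = (scaled_train_X[:, 0] > 0).astype(int)   # placeholder labels

rbf_matrix = rbf_kernel(scaled_train_X, scaled_train_X, gamma=0.1)

# SVC calls the kernel as kernel(A, B), so gamma is fixed with functools.partial.
clf = SVC(kernel=partial(rbf_kernel, gamma=0.1)).fit(scaled_train_X, train_y)
pred = clf.predict(scaled_test_X)
```

Computing `X @ X.T` only to read its diagonal follows the hint literally; for larger data, `(X ** 2).sum(axis=1)` gives the same vector without forming the full Gram matrix.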

Code to complete

`import numpy as np`

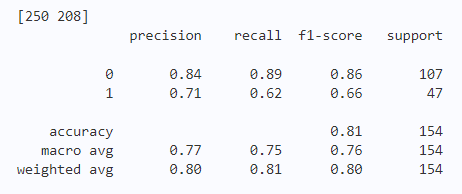

[250 208]

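| | precision | recall | f1-score | support |
|---|---|---|---|---|
| 0 | 0.84 | 0.89 | 0.86 | 107 |
| 1 | 0.71 | 0.62 | 0.66 | 47 |
| accuracy | | | 0.81 | 154 |
| macro avg | 0.77 | 0.75 | 0.76 | 154 |
| weighted avg | 0.80 | 0.81 | 0.80 | 154 |

Expected results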

Task 3: Implement SVM with a custom function (optional)

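The main tasks are:

- Load the iris dataset from sklearn, extract the features and labels, and split the data into training and test sets.
- Implement SVM with a custom function.
- Call the SVM function to train a support vector machine, and evaluate it on the test set.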
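The lab's own SVM class is not shown in full here, so the block below is only a minimal sketch of one way to approach the task: a linear SVM trained by sub-gradient descent on the regularized hinge loss, wrapped in a one-vs-rest scheme for the three iris classes. The class names `LinearSVM` and `OneVsRestSVM`, the hyperparameters `lam`, `lr`, and `n_epochs`, and the stratified split with `random_state=0` are all assumptions; a kernelized or SMO-based solver would be an equally valid answer.

```python
import numpy as np
from sklearn.datasets import load_iris
from sklearn.model_selection import train_test_split
from sklearn.preprocessing import StandardScaler


class LinearSVM:
    """Binary linear SVM: minimizes lam/2 * ||w||^2 + mean hinge loss."""

    def __init__(self, lam=0.01, lr=0.1, n_epochs=1000):
        self.lam = lam            # L2 regularization strength (assumed default)
        self.lr = lr              # constant step size (assumed default)
        self.n_epochs = n_epochs

    def fit(self, X, y):
        # y must hold labels in {-1, +1}.
        n, d = X.shape
        self.w = np.zeros(d)
        self.b = 0.0
        for _ in range(self.n_epochs):
            margins = y * (X @ self.w + self.b)
            viol = margins < 1    # samples inside the margin or misclassified
            grad_w = self.lam * self.w - (y[viol][:, None] * X[viol]).sum(axis=0) / n
            grad_b = -y[viol].sum() / n
            self.w -= self.lr * grad_w
            self.b -= self.lr * grad_b
        return self

    def decision_function(self, X):
        return X @ self.w + self.b


class OneVsRestSVM:
    """Multi-class wrapper: one binary LinearSVM per class, argmax of the scores."""

    def __init__(self, **svm_kwargs):
        self.svm_kwargs = svm_kwargs

    def fit(self, X, y):
        self.classes_ = np.unique(y)
        self.models_ = [
            LinearSVM(**self.svm_kwargs).fit(X, np.where(y == c, 1.0, -1.0))
            for c in self.classes_
        ]
        return self

    def predict(self, X):
        scores = np.column_stack([m.decision_function(X) for m in self.models_])
        return self.classes_[scores.argmax(axis=1)]


def evaluate_on_iris(random_state=0):
    """Train on a stratified iris split and return the test accuracy."""
    X, y = load_iris(return_X_y=True)
    train_X, test_X, train_y, test_y = train_test_split(
        X, y, random_state=random_state, stratify=y
    )
    scaler = StandardScaler().fit(train_X)
    model = OneVsRestSVM().fit(scaler.transform(train_X), train_y)
    pred = model.predict(scaler.transform(test_X))
    return float(np.mean(pred == test_y))


accuracy = evaluate_on_iris()
print(f"test accuracy: {accuracy:.3f}")
```

The accuracy this prints depends on the split and the hyperparameters, so it should not be expected to match the figure reported for the lab's own implementation.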

Code to complete

`import numpy as np`

`class SVM:`

`# Call SVM to train the model and evaluate it on the test set`

0.921